Autonomous vehicles perform well – until the environment turns against them.

Fog, dust, snow, or bright sunlight can throw traditional sensors off. LiDAR scatters in particles. Cameras struggle with glare. Radar lacks the detail to pick up small obstacles. When this happens, autonomy doesn’t just slow down. It fails.

That’s the harsh reality for mining fleets, military vehicles, and port operators, where tough conditions often force humans to take over.

The issue isn’t the AI making decisions, but the sensors feeding it. Without reliable vision in messy real-world environments, autonomy can’t scale.

Meet LEO, the all-weather sensor from Autonomous Knight.

Instead of using separate LiDAR, radar, and thermal units, LEO combines visible, infrared, and thermal imaging into one clean video stream.

It works in tough conditions (fog, smoke, dust, snow, or darkness) where most sensors struggle or fail. And because it runs fusion directly at the edge, it gives you sharp, real-time awareness without overloading your system.

LEO delivers more than hardware. It’s a complete perception platform.

Autonomous Knight pairs the sensor with a full software stack – a developer-friendly SDK, real-time sensor fusion software, and an AI simulation suite built to handle edge cases in messy environments.

It works with open APIs, connects easily to most autonomy stacks – from land vehicles to marine systems – and is hardware-agnostic, so teams can integrate it without overhauling existing setups.

From sensing to simulation, it’s everything needed to build autonomy that works in even the toughest real-world environments.

Autonomous Knight isn’t focused on robotaxis and fleets (for now, at least). It’s building primarily for the industries that need autonomy right now.

Think mining, maritime, agriculture, and defense, all industries where weather, dust, and danger are part of daily operations.

In mines, haul trucks move through thick dust clouds that blind cameras and scatter LiDAR. On ships, fog, rain, and glare can cut visibility to almost nothing. And these are everyday conditions, not rare exceptions.

LEO handles them by combining radar, thermal imaging, and AI into a single, rugged perception system. It keeps machines running around the clock, even when other sensors can’t.

Autonomous Knight isn’t your average startup. It’s led by veterans who know autonomy and backed by global names that know how to scale it.

On the partner side, big players are already involved. Volvo Group brought the company into its CampX accelerator. Support from EIT Urban Mobility, Plug and Play, and Berkeley’s SkyDeck adds global reach and technical depth.

With early EU innovation grants, photonics awards, and active pilots in the field, Autonomous Knight is already gaining notable traction.

The company isn’t selling another sensor or chasing consumer apps. It’s offering something more foundational – a smart vision layer that plugs into any autonomy system.

Here’s how the model works:

In short, Autonomous Knight isn’t selling sensors. It’s providing visibility that systems can trust, along with the intelligence to act on it.

In autonomy, the biggest challenge isn’t maps or driving logic, but how well a machine can see.

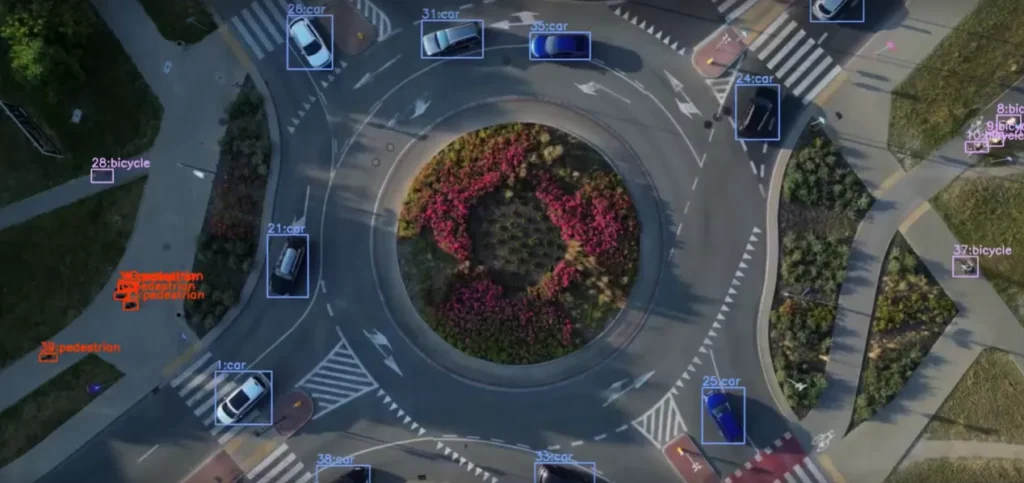

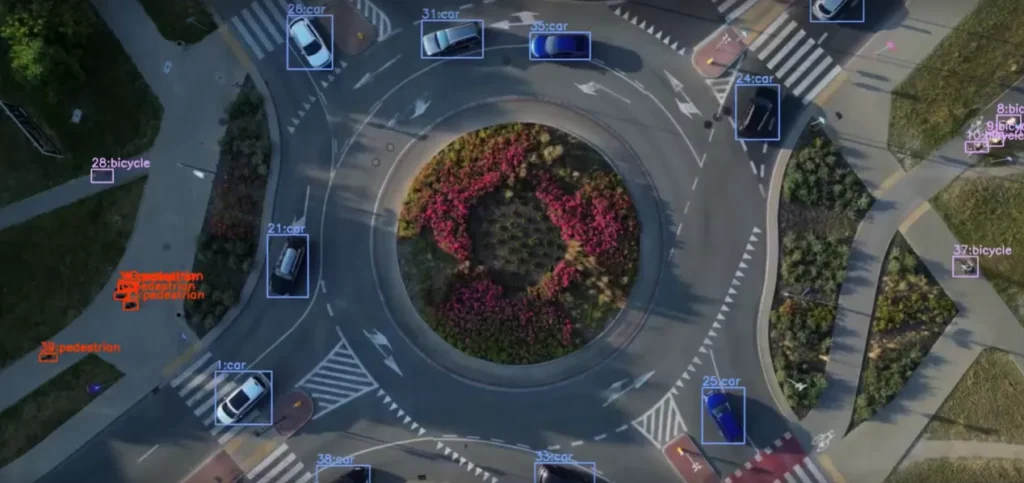

Most systems still falter in tough conditions like fog, glare, snow, or deep shadows. And when sensors fail, so does safety. That’s why multispectral fusion that combines visual, thermal, and radar data is becoming a key advantage.

Autonomous Knight fills that gap. Its tech gives vehicles a clearer picture in real-world environments, helping them make smarter decisions, avoid mistakes, and stay operational when others can’t.

Whether it’s a delivery robot on icy streets or a safety-critical vehicle in low light, better perception is essential. And that’s exactly what Autonomous Knight is building.

Autonomous Knight is moving from testing to deployment.

With a seed round underway, the team is focused on scaling its multispectral vision system from proven prototypes to live pilots. Early rollouts are already happening in mining and autonomous shipping, and talks are under way with potential OEM partners.

And the bigger goal? We can only guess right now, but we see a clear ambition to become the invisible engine behind autonomy: a plug-and-play vision layer that works in any robot, truck, or drone, no matter the conditions.